I've been going through all the old issues of Robotics Age magazine (on archive.org). In the March 1984 issue was an editorial titled "Why Toys?" Then, in the letters section, was the letter/comment that provoked that editorial. Here is the text of that letter:

In regards to your "Super Armatron" article, why bother with toys?

Gary Hodgson

Heathkit

PO Box 691

Bridgeton, MO 63044

There was another letter following, which took a different point of view to the same article. The editorial and letters struck a nerve...

I was shocked that such an attitude came from anybody, much less from someone apparently employed by Heathkit!

First, a bit about the article. I haven't read it, but the editorial gave enough information to know what it was about. The Armatron was a robotic arm toy sold in the 80s by Radio Shack, among others. It had joystick controls to allow the operator to move the arm and open and close the gripper. It wasn't intended to be anything but that. A lot of experimenters at the time initially thought it was a great, inexpensive way to get a robotic arm to interface to a computer. Alas, it turned out not to be so great for that. It was a mechanical marvel: it had a single electric motor, with all sorts of mechanical gearing and linkages to control six different motions with that one motor. But the authors of that article persevered and managed to create a fairly sophisticated computer controlled arm from it.

But that isn't really the point of this post. As much as I like robots and computers, the nerve that was struck was much more fundamental.

Over the last 10,000 or so years, people have created all sorts of wonderful devices. And some not so wonderful. Often it was for some perceived need. But quite often it was rather whimsical, perhaps a toy. Those devices, even the less than great ones, embody a great deal of knowledge, ingenuity, and creativity. Toys are, for the most part, cheap: as cheap as their maker can make them. They are high volume, so money is put up front in engineering to be offset in manufacturing savings. They tend to have a high density of creative engineering -- a significant education in a $30 box!

When I was around ten years old, my dad was replacing the water pump on the family car. I was helping. That mostly meant handing him wrenches and tools: "give me a nine sixteenths." Among all the father-son benefits of that, I also learned what nine-sixteenths looked like. That's a handy skill on its own. And an unusual one these days.

But the true learning value came when he got the old water pump off. Well, there was the "Advanced Cursing 510" before that... When he got the old water pump off, he handed it to me and said "figure out how it works." Ask a lot of university students how a water pump works, and you'll likely get some really good textbook answers. And some less-good answers. But there is a world of difference between a textbook and a water pump. Not only did I figure out how the impeller worked, but I also learned about the clearances needed, bearings, bushings, seals, gaskets, and pulleys. That was one of the most profitable hours of my education. I even revisited that old water pump several times. I kept it a long time, and continued to learn from it.

So, to the point, we can learn an awful lot that is really useful by taking things apart. Even better if we can put them back together and make them work again. But often these "educational deconstructions" lead to a non-working device afterward. Enter toys. As I mentioned, toys often have some amazing feats of engineering hidden beneath the plastic skin. And they're usually pretty cheap. So an educational foray into the innards of a toy might not end with a large financial loss. It can be a pretty cheap engineering education. Take it apart; figure out how all the parts work together; determine why each part is needed and what it does; wonder, "why did they do it this way instead of some other?"

And, you don't even need to buy new toys. You can find plenty of discarded broken toys. Finding out why it broke is more engineering education. Failure analysis is a huge part of engineering. Goodwill and thrift shops often have interesting toys for cheap. Radio control cars without the controller aren't much good for anything else, but they provide a wealth of knowledge under the screwdriver. Dollar stores, such as Dollar Tree, often have things like pull-back cars; you roll them backward and let them go and they race forward. Open one of these sometime. Better yet, have your kid open one. Even better, buy two. Have your kid take one apart and try to put it back together. Chances are the first one won't go back together right. But then the kid gets a second try. The positive feedback of how much they learned on the "failure" is great. And if they get the second one operational again, that's an amazing confidence booster.

And they'll be in really good company. Albert Einstein took apart clocks as a kid. He even got some back together. Worked out pretty well for him!

I studied physics in college. I was surrounded by incredibly brilliant young people. They were simply amazing at doing physics and math -- on paper. But ask them to build a simple electronic circuit they had just designed. I was utterly shocked at how difficult it was for these super-brilliant people to do simple hands-on, practical work. I suppose they never took their toys apart!

Continuous Ops

Sunday, September 1, 2019

Tuesday, August 13, 2019

V/R

Four years? Really? It doesn't seem like four years since my last post. Oh, well. Guess I better get on with my tirade.

How many times have you gotten an email signed like this?

V/R

John Smith

Maybe you know what V/R stands for, or maybe you don't. That's part of the problem. But the real irony is what it DOES stand for: Very Respectfully!

I ask you, how respectful is it to be so lazy you can't type out the words? "Gee, I really respect you but not enough to type out the words saying so." Wins me over every time.

Friday, August 28, 2015

Some Pointers

The C programming language has a lot of neat features. It simultaneously allows you to write programs at a fairly high level of abstraction and access the machine at the lowest level, just like assembly language. The term "high level assembly language" has been used to describe it. Programmers don't like it when the language gets in the way of what they are trying to do. C rarely gets in the way of anything low-level, unlike most other high-level languages. In fact, it rarely gets in the way of much of anything. C allows you to do about anything you want. Modern ANSI standard C has made it a bit more difficult, but safer, to do those things. But the flavor is still there. Being able to do anything you want will quickly get you into trouble. ANSI C still allows it, but posts warning signs that you must acknowledge first. Ignore them at your own risk. This blog is about what happens when you ignore them without fully thinking that through.

Earlier this week I was asked to review some embedded interrupt code. Code reviews are a mixed blessing: they are extremely useful and important, but you end up telling others how to do their job. You can also pick up some new techniques by reviewing others' code, too, which is good. Enough about code reviews. Perhaps a later blog about them. But this particular review prompted me to write this post.

A "pointer" in a programming language is simply a variable that holds, instead of a useful value, an address of a piece of memory that (usually) holds the useful value. You might need to think about that for a few seconds. Pointers are one of the hardest things for many people to grasp. A variable is normally associated with some value of some data type. For instance in C:

int x;

declares a variable that will hold an integer. Simple enough. Or this one, which declares an array of ten double values and puts those values into it:

double arr[10] = { 0.0, 1.1, 2.2, 3.3, 4.4, 5.5, 6.6, 7.7, 8.8, 9.9};

By using the name of the variable, you get the value associated with it:

x = 5;

y = x; // y now has the value 5 copied from x

But pointers are different. A processor has a bunch of memory that can hold anything. Think of the memory as an array of bytes. That memory has no defined data type: it is up to the program to determine what value of what type goes where. A pointer is an index of that array. The pointer just "points into" the array of raw memory somewhere. It is up to the programmer to decide what kind of value gets put into or taken out of that memory location. This concept pretty much flies in the face of good programming as we have learned it over the last 50 years. It breaks the abstraction our high level language is designed to give us in the interest of good, safe programming. Having a data type associated with a variable is important to good programming. It gives the compiler the chance to check the data types used to make sure what you are doing makes sense. If you have this code:

double d = 3.14159265358;

int i = d;

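You might expect the compiler to refuse that, and a stricter language would. C actually accepts it and quietly converts the double to an int, throwing away the fractional part, although a good compiler will warn you if you ask it to (GCC and Clang do so with -Wconversion). Either way, the compiler knows you have a double value in d that is being assigned to an integer variable, and that knowledge is what lets it flag something that could otherwise become a nasty bug that would be difficult to find.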

Pointers are extremely powerful and useful. But they are very dangerous. Almost every language has pointers in one form or another. But most of the "improvements" over C have been to make pointers safer. Java and C# claim to not have pointers and replace them with "references" which are really just a safer, more abstract form of pointers under the hood. Even C has tried to make pointers safer. The ANSI standard added some more type checking features to C that had been missing. In C, you declare a pointer like this:

int *p_x; // declare a pointer to an integer

The "*" means "pointer to." The variable name, p_x, holds a pointer to an integer: just the address of where the integer is stored. If you assign the variable to another, you get a copy of the address, not the value:

p_y = p_x; // p_y now holds the same address of an integer, not an integer.

To get the value "pointed to" you have to "dereference" the pointer, by using the same "*" operator:

int x = *p_x; // get the value pointed to by p_x into x
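Here is a minimal sketch that puts the declaration, the address copy, and the dereference together in one place. The function names set_to_five and pointer_demo are made up for this illustration, and it uses the "&" (address of) operator to get a pointer to an existing variable.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical helper: writes through a pointer to change the caller's int. */
void set_to_five(int *p_x)
{
    *p_x = 5;               /* dereference: store 5 where p_x points */
}

/* Walks through declare / copy / dereference and returns the final value. */
int pointer_demo(void)
{
    int x = 0;
    int *p_x = &x;          /* p_x holds the address of x, not its value */
    int *p_y = p_x;         /* copies the address: both now point at x */

    assert(p_y == p_x);     /* same address, so the same int */

    set_to_five(p_x);       /* x is now 5 */
    *p_y = *p_y + 1;        /* the same int reached through p_y: x is now 6 */

    printf("x = %d, *p_x = %d, *p_y = %d\n", x, *p_x, *p_y);
    return x;
}
```

Calling pointer_demo() from main shows that there is only ever one integer involved: x, *p_x, and *p_y are three ways of reaching it.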

The p_x tells the compiler "here is an address of an integer" and the "*" tells it to get the integer stored there. What we are doing is assigning a data type of "pointer to int" to the pointer. The following code won't compile:

double *p_d;

int *p_i;

p_i = p_d; // assign address of double to address of int: ERROR

The compiler sees that you are trying to assign the address of a double value to the pointer declared to point to an int. That is an error (some older compilers report it only as a warning, but a diagnostic is required either way). It gives us a little safety net under our pointers. There are plenty of circumstances this won't help, but it is a start.

However, C doesn't want to get in our way. From the start, C allowed the programmer to do about anything. ANSI C didn't change that much, but did make it necessary to tell the compiler "I know what I am doing here is dangerous, but do it anyway." Sometimes we want to have a pointer to raw memory without having a data type attached to that memory. In C we can do that. ANSI C defined the "void" data type for just such things. The void data type means, essentially, no data type, just raw memory:

void * p_v; // declare a "void pointer" that points to raw memory

This can be quite handy, like a stick of dynamite.

You can assign any type of pointer to or from a void pointer, but the rules are a bit strange. As dangerous as pointers are, void pointers are even more so. Once a pointer is declared as void, the compiler has NO control over what it points to, and so can't check anything you do with that pointer! If there were no restrictions on assigning void pointers we could do this:

double *p_d; // declare a pointer to a double

*p_d = 3.1415; // put 3.1415 into the memory pointed to by p_d

void *p_v; // declare a pointer to void

p_v = p_d; // assign address of double in p_d to address of void in p_v

int *p_i; // declare p_i as a pointer to an integer

*p_i = *p_v; // copy the value pointed to by p_v (a double!) to the integer pointed to by p_i

The compiler couldn't stop us and has no way of checking that the values make sense. It will cheerfully copy the memory pointed to by p_d and p_v into the memory pointed to by p_i, which should be an integer. Since floating point (double) values and integer values are stored in different formats, that will NOT be what we want! We now have a nasty bug. Fortunately, the designers of C made it so the above won't compile. A void pointer can't be dereferenced directly: it must first be "cast" to a pointer of the appropriate type. Tell the compiler "I know what I'm doing" if that is really what you want to do.
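As a rough sketch of what that cast looks like, and of what goes wrong when the type behind a void pointer is misjudged, consider the two functions below. The names read_as_double and raw_bytes_as_int are invented for this example. The second function uses memcpy to reinterpret the bytes so that the demonstration itself stays legal C, and it assumes an int is no larger than a double, which holds on the usual platforms.

```c
#include <assert.h>
#include <string.h>

/* Reads the double that p_v points to. The cast is our promise to the
   compiler that a double really does live at that address. */
double read_as_double(void *p_v)
{
    double *p_d = (double *)p_v;    /* explicit cast: "I know what I'm doing" */
    return *p_d;
}

/* What a wrong guess amounts to: the first bytes of whatever p_v points to,
   reinterpreted as an int. Assumes sizeof(int) <= the size of the object. */
int raw_bytes_as_int(void *p_v)
{
    int i;
    memcpy(&i, p_v, sizeof i);      /* copy raw bytes, no conversion at all */
    return i;
}
```

Given double d = 3.1415, read_as_double(&d) gives back 3.1415, while raw_bytes_as_int(&d) returns an integer built from the bit pattern of the double. The exact number depends on the machine, but it is not 3.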

But we have only scratched the surface. Pointers point to memory. What all can be in memory? Well, just about anything. Where does the computer hold your code? Yep, in memory!

Your code is held in memory, just like your data. A pointer points to memory. So a pointer can point to code. Not all languages give you the opportunity to take advantage of that, but of course C does.

And, of course, it comes with a long list of dangers. You can declare a "pointer to a function" that can then be "dereferenced" to call the function pointed to. That is incredibly powerful! You can now have a (pointer) variable that points to any function desired and call whatever function it is assigned. One example, which is used in the C standard library, is a sorting function. You can write the "world's best" sort function, but it normally will only sort one data type. You will have to rewrite it for each and every data type you might want to sort. But, the algorithm is the same for all data types. The only difference is how to compare whether one value is greater than the other. So, if you write the sort function to take a pointer to a function that compares the data type, returning an indication of whether one is greater than the other, you only have to write the comparison for each type. Pass a pointer to that function when you call the sort function and you don't have to rewrite the sort function ever again!

It should be fairly obvious that the compare function will need to accept certain parameters so the sort function can call it properly. The sort function might look something like this:

void worlds_best_sort(void *array_to_sort, COMPARE_FN greater)

{

// some code (the indexing below is schematic: a real version must know the element size)

if ( greater (&array_to_sort[n], &array_to_sort[n+1]) ) ....

// some more code

}

Inside the sort function a call is made to the function passed in, "greater", to check if one value is greater than the other. The "&" operator means "address of" and gives a pointer to the value on the right. So the greater() function takes two pointers to the values it compares. Perhaps it returns an int, a 1 if the first value is greater than the second, a 0 if not. The pointer we pass must point to a function that takes these same parameters if we want it to actually work. C lets us declare a pointer to a function and describes the function just like any other data type:

int (*compare_fn_p)(void *first, void *second);

That rather confusing line of code declares a pointer, named compare_fn_p, to a function. The function pointed to will take two void pointers as parameters and return an int. The parentheses around the *compare_fn_p tell the compiler we want a pointer to the function. Without those parentheses the compiler would attach the "*" to the "int" and decide we were declaring a function (not a pointer to a function) that returned a pointer to an int. Yes, it is very confusing. It took me years to memorize that syntax. To be even safer, C allows us to define a new type. We can define all sorts of types and then declare variables of those new types. That lets the compiler check our complex declarations for us and we don't have to memorize the often confusing declarations. If we write this:

typedef int (*COMPARE_FN)( void *, void *);

we tell the compiler to define a type ("typedef") that is a pointer to a function that takes two void pointers as parameters and returns an int. The name of the new type is COMPARE_FN. Notice the typedef is just like the declaration above, except for the word "typedef" in front and the name of the new type is where the function pointer name was before. The name, "COMPARE_FN," is what we used in the parameter list for the sort function above. The sort function is expecting a pointer to a function that takes two void pointers and returns an int. The compiler can check that any function pointer we pass points to the correct type of function, IF WE DECLARE THE FUNCTION PROPERLY! If we write this function:

int compare_int( int * first, int *second);

and pass that to the sort function, it won't compile because the "function signature" doesn't match.
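To make that concrete, here is one way the pieces might fit together in a complete, compilable form. This is only a sketch under a few assumptions: the name sketch_sort, the count and size parameters, and the 64-byte element limit are additions of mine, and a plain bubble sort stands in for the "world's best" algorithm. The COMPARE_FN typedef is the one shown above.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

typedef int (*COMPARE_FN)(void *, void *);

/* Both functions match COMPARE_FN: return 1 if *first > *second, else 0. */
int greater_int(void *first, void *second)
{
    return *(int *)first > *(int *)second;
}

int greater_double(void *first, void *second)
{
    return *(double *)first > *(double *)second;
}

/* Bubble sort over raw memory. count is the number of elements and size is
   the size of one element in bytes (assumed parameters, not in the text). */
void sketch_sort(void *array_to_sort, size_t count, size_t size, COMPARE_FN greater)
{
    unsigned char *base = array_to_sort;
    unsigned char tmp[64];              /* assumes elements are at most 64 bytes */

    if (size > sizeof tmp)
        return;                         /* element too large for this sketch */

    for (size_t pass = 0; pass + 1 < count; pass++) {
        for (size_t n = 0; n + 1 < count - pass; n++) {
            unsigned char *a = base + n * size;     /* element n */
            unsigned char *b = a + size;            /* element n + 1 */
            if (greater(a, b)) {                    /* call through the pointer */
                memcpy(tmp, a, size);               /* swap the two elements */
                memcpy(a, b, size);
                memcpy(b, tmp, size);
            }
        }
    }
}
```

With int a[] = {3, 1, 2}, the call sketch_sort(a, 3, sizeof a[0], greater_int) leaves the array as 1, 2, 3, and the same sort routine handles an array of doubles when given greater_double. The standard library's qsort follows the same design, though its comparison function takes const void pointers and returns a negative, zero, or positive value instead of 1 or 0.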

As you can see, these declarations get messy quick. We could avoid a lot of these messy declarations by using void pointers: raw pointers to whatever. DON'T! ANY pointer, no matter what it points to, can be assigned to a void pointer. It loses all type information when that is done. We could declare our sort routine like this:

void worlds_best_sort(void *array_to_sort, void *);

Now we can pass a pointer to ANY function, and indeed, any THING, as the parameter to the sort routine. We wouldn't need to mess around with all those clumsy, confusing declarations of function pointer types. But, the compiler can no longer help us out! We could pass a pointer to printf() and the compiler would let us! We could even pass a pointer to an int, which the program would try to run as a function! But by declaring the type of function that can be used with a typedef declaration, we give the compiler the power to check on what we give it. The same is true for other pointers, which can be just as disastrous.

I think the lesson here is that the C standard committee went to great lengths to graft some type checking onto a language that had very little, and still maintain compatibility. They also left the programmer with the power to circumvent the type checking when needed, but it is wise to do that only when absolutely necessary. As I saw in the code review earlier this week, even professional programmers who have been doing it a long time get caught up with types and pointers. If you aren't very familiar with pointers I urge you to learn about them: they are one of the most powerful aspects of C. Whether you are new to pointers and/or programming or you have been at it for years, I even more strongly urge you to use the type checking given to us by the creators of C to make your pointers work right. Pointers, by nature, are dangerous. Let's make them as safe as we can.

Sunday, August 16, 2015

Let's Go P Code!

For those who have read a lot of my writing it won't be a shock that I'm not an Arduino fan. I have also made it clear that I don't think C, C++, or the Arduino bastardization of both are the right language(s) for the Arduino target user. When I preach these things the question is always asked, "what is the alternative?" My response is usually something like "I don't have a good one. We need to work on one." Today I had somewhat of a fledgling idea. It is far from complete, but it may be a path worthy of following. Let me explain.

If you are familiar with Java, you probably know that it is a compiled / interpreted language. That sentence alone will fire up the controversy. But the simple fact is, Java compiles to a "bytecode" rather than native machine language, and "something" has to translate (interpret, usually) that bytecode to native machine code at some point to do anything useful. What you may not know is that Java was designed originally to be an embedded language that was an improvement on, but somewhat based on, C++. I'm not fond of Java for several reasons, but it does indeed have some advantages over C and C++. However, for small embedded systems, like a typical Arduino, it still isn't the right language. And, though it has some improvements, it still carries many of the problems of C and C++. It also isn't really as small as we would desire for a chip running at 20 MHz or less with 32K of program memory.

But for this exercise, let's look at some of the positives of Java. That interpreted bytecode isn't all that inefficient. It is much better than, say, the BASIC interpreter on a Picaxe. And the language is lightyears beyond Picaxe. (Picaxe and BASIC stamps and the like should result in prison sentences for their dealers!) Maybe we have a slowdown of 2 to 4 times as typical. For that, we get the ability to run the (almost) exact same program on a number of processors. All that is needed is a bytecode interpreter for the new platform. We also get fast, relatively simple, standardized compilers that can be pretty good and helpful at the same time. And we can use the same one(s) across the board. The bytecode interpreter is rather simple and easy to write. Much simpler than porting the whole compiler to a new processor. And the way the code runs is standardized whether you are using an 8, 16, 32, or 64 bit processor: an int is always 32 bits.

So Java isn't the right language, but it has some good ideas. Let's step back in time a bit before Java. James Gosling, the inventor of Java, has said much of the idea of Java came from the UCSD P system. Now, unless you have been in the computer field as long as I have or you are a computer history buff, you have probably never heard of the UCSD P system. To get the story we need to step back even further. Set your DeLorean Dash for 1960 and step on the gas.

Around 1960, high level languages were pretty new. Theories used in modern compilers were only starting to be developed. But some people who were very forward thinking realized that FORTRAN and COBOL, the two biggest languages of the day, were the wrong way to go. They created a new, structured, programming language called Algol 60. Most modern languages (C, C++, Java, Objective C, Python, etc.) trace much of their design back to Algol 60. Although it was never very popular in the USA, it did gain some ground in Europe. But it was developed early in the game when new ideas were coming about pretty much daily. It wasn't long before a lot of computer scientists of the day realized it needed to be improved. So they started to develop what would become Algol 68, the new and improved version. One of the computer scientists involved wanted to keep it simple like the original. Niklaus Wirth pushed for a simple but cleaner version of the original Algol 60 (well, there was an earlier Algol 58, but it had and has very little visibility.) Wirth proposed his Algol-W as a successor to Algol 60, but the Algol committee rejected that and went in line with the thinking of the day: more features are better. What they created as Algol 68 was a much larger and more complex language that many Algol lovers (including Wirth) found distasteful. Wirth was a professor of computer science at ETH Zurich and wanted a nice, clean, simple language to use to teach programming without the complexities and dirtiness of the "real world." He, for whatever reasons, decided not to use any of the Algol languages and created a new language, partly in response. He called it Pascal (after Blaise Pascal, a mathematician.)

Pascal is a simple and very clean language, although in original form it lacks many features needed to write "real" programs. But it served well for teaching. The first compiler was written for the CDC 6000 line of computers. But soon after a "portable" compiler was created that produced "machine" language for a "virtual" computer that didn't really exist. Interpreters were written for several different processors that would interpret this "P code" and run these programs. Aha! We have a single compiler that creates the same machine language to run on any machine for which we write a P Code interpreter! But Pascal is a much smaller and simpler language, with much better facilities for beginning programmers than Java. It was, after all, designed by a computer science professor specifically to teach new programmers how to write programs the "right" way! Pascal, and especially the P Code compiler, became quite popular for general use. Features to make it more appropriate for general use were added, often in something of an ad-hoc manner. Nevertheless, it became quite popular and useful. Especially with beginning programmers or people teaching beginning programmers.
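To give a feel for how small such an interpreter can be, here is a toy stack machine in C. To be clear, this is only a sketch of the general idea: the four opcodes and the name run_pcode are invented for illustration and are not the real P code instruction set, and the stack depth of 16 is an arbitrary choice.

```c
#include <assert.h>
#include <stddef.h>

/* Invented opcodes for illustration only; NOT the real P code set. */
enum { OP_PUSH, OP_ADD, OP_MUL, OP_HALT };

/* Interprets a program for a tiny stack machine. Returns the value on top
   of the stack at OP_HALT, or -1 on any error (a real interpreter would
   report errors separately from results). */
int run_pcode(const int *code, size_t length)
{
    int stack[16];
    size_t sp = 0;                      /* next free stack slot */
    size_t pc = 0;                      /* program counter */

    while (pc < length) {
        switch (code[pc++]) {
        case OP_PUSH:                   /* operand follows the opcode */
            if (sp >= 16 || pc >= length)
                return -1;              /* stack overflow or truncated program */
            stack[sp++] = code[pc++];
            break;
        case OP_ADD:                    /* pop two values, push their sum */
            if (sp < 2)
                return -1;
            sp--;
            stack[sp - 1] += stack[sp];
            break;
        case OP_MUL:                    /* pop two values, push their product */
            if (sp < 2)
                return -1;
            sp--;
            stack[sp - 1] *= stack[sp];
            break;
        case OP_HALT:
            return sp > 0 ? stack[sp - 1] : -1;
        default:
            return -1;                  /* unknown opcode */
        }
    }
    return -1;                          /* ran off the end without OP_HALT */
}
```

The program { OP_PUSH, 2, OP_PUSH, 3, OP_ADD, OP_PUSH, 4, OP_MUL, OP_HALT } computes (2 + 3) * 4 and returns 20. The "compiled" program is just an array of numbers, so the same array would run unchanged on any processor that has this one function ported to it.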

Step now to the University of California at San Diego. Dr. Kenneth Bowles was running the computer center there and trying to build a good system to teach programming. He came up with the idea of making a simple system that could run on many different small computers rather than one large computer that everyone shared. He found the Pascal compiler and its P Code, and started to work. He and his staff/students created an entire development system and operating system based on P Code and the Pascal compiler. It would run on many of the early personal computers and was quite popular. IBM even offered it as an alternative to PC-DOS (MS-DOS) and CP/M. I even found a paper on the web from an NSA journal describing how to get started with it. Imagine writing one program and compiling it, then being able to run that very same program with no changes on your Commodore PET, your Apple ][, your Altair running CP/M, your IBM PC, or any number of other computers. Much as Java promises today, but in 64K and 1 or 2 MHz!

Pascal itself has many advantages over C for new programmers. C (and descendants) have many gotchas that trip up even experienced programmers on a regular basis. Pascal was designed to protect from many of those. The language Ada, devised for the US Department of Defense and well regarded when it comes to writing "correct" programs, was heavily based on Pascal. There are downsides to Pascal, but they are relatively minor and could be rather easily overcome. Turbo Pascal, popular in the early 80s on IBM PCs and CP/M machines (and one of the main killers of UCSD P System) had many advances that took away most of the problems of Pascal.

So here is the idea. Write P Code interpreters for some of the small micros available, like Arduino. Modernize and improve the UCSD system, especially the Pascal compiler. Create a nice development system that allows Pascal to be written for most any small micro. Many of the problems of C, C++, and Arduino go away almost instantly. The performance won't be as good as native C produced machine language, but close enough for most purposes. Certainly much better than other interpreted language options. Niklaus Wirth went on to create several successors to Pascal, including the very powerful Modula 2 and Oberon. Modula 2 was implemented early on with M code, similar to P code. He even built a machine (Lilith) that ran M Code directly. If Pascal isn't right, perhaps Modula 2 would be.

In any case, I think this is a path worth investigating. I plan to do some work in the area. I would be very interested in hearing what you think about the idea.

Oh! And what about the title of this blog post? Well, the P Code part should be fairly obvious at this point. But the rest of it may not be. My alma mater, Austin Peay State University, has a football fight slogan of "Let's Go Peay!" I love that slogan, so I thought it would fit well with this idea.

Let's go P Code!

If you are familiar with Java, you probably know that it is a compiled / interpreted language. That sentence alone will fire up the controversy. But the simple fact is, Java compiles to a "bytecode" rather than native machine language, and "something" has to translate (interpret, usually) that bytecode to native machine code at some point to do anything useful. What you may not know is that Java was designed originally to be an embedded language that was an improvement, but somewhat based on, C++. I'm not fond of Java for several reasons, but it does indeed have some advantages over C and C++. However, for small embedded systems, like a typical Arduino, it still isn't the right language. And, though it has some improvements, it still carries many of the problems of C and C++. It also isn't really all that small as we would desire for a chip running at 20 MHz or less with 32K of program memory.

But for this exercise, let's look at some of the positives of Java. That interpreted bytecode isn't all that inefficient. It is much better than, say, the BASIC interpreter on a Picaxe. And the language is lightyears beyond Picaxe. (Picaxe and BASIC stamps and the like should result in prison sentences for their dealers!) Maybe we have a slowdown of 2 to 4 times as typical. For that, we get the ability to run the (almost) exact same program on a number of processors. All that is needed is a bytecode interpreter for the new platform. We also get fast, relatively simple, standardized compilers that can be pretty good and helpful at the same time. And we can use the same one(s) across the board. The bytecode interpreter is rather simple and easy to write. Much simpler than porting the whole compiler to a new processor. And the way the code runs is standardized whether you are using an 8, 16, 32, or 64 bit processor: an int is always 32 bits.

So Java isn't the right language, but it has some good ideas. Let's step back in time a bit before Java. James Gosling, the inventor of Java, has said much of the idea of Java came from the UCSD P system. Now, unless you have been in the computer field as long as I have or you are a computer history buff, you have probably never heard of the UCSD P system. To get they story we need to step back even further. Set your DeLorean Dash for 1960 and step on the gas.

Around 1960, high level languages were pretty new. Theories used in modern compilers were only starting to be developed. But some people who were very forward thinking realized that FORTRAN and COBOL, the two biggest languages of the day, were the wrong way to go. They created a new, structured, programming language called Algol 60. Most modern languages (C, C++, Java, Objective C, Python, etc.) trace much of their design back to Algol 60. Although it was never very popular in the USA, it did gain some ground in Europe. but it was developed early in the game when new ideas were coming about pretty much daily. It wasn't long before a lot of computer scientists of the day realized it needed to be improved. So they started to develop what would become Algol 68, the new and improved version. One of the computer scientists involved wanted to keep it simple like the original. Niklaus Wirth pushed for a simple but cleaner version of the original Algol 60 (well, there was an earlier Algol 58, but it had and has very little visibility.) Wirth proposed his Algol-W as a successor to Algol 60, but the Algol committee rejected that and went in line with the thinking of the day: more features are better. What they created as Algol 68 was a much larger and more complex language that many Algol lovers (including Wirth) found distasteful. Wirth was a professor of computer science at ETH Zurich and wanted a nice, clean, simple language to use to teach programming without the complexities and dirtiness of the "real world." He, for whatever reasons, decided not to use any of the Algol languages and created a new language, partly in response. He called it Pascal (after Blaise Pascal, a mathematician.)

Pascal is a simple and very clean language, although in original form it lacks many features needed to write "real" programs. But it served well for teaching. The first compiler was written for the CDC 6000 line of computers. But soon after a "portable" compiler was created that produced "machine" language for a "virtual" computer that didn't really exist. Interpreters were written for several different processors that would interpret this "P code" and run these programs. Aha! We have a single compiler that creates the same machine language to run on any machine for which we write a P Code interpreter! But Pascal is a much smaller and simpler language, with much better facilities for beginning programmers than Java. It was, after all, designed by a computer science professor specifically to teach new programmers how to write programs the "right" way! Pascal, and especially the P Code compiler, became quite popular for general use. Features to make it more appropriate for general use were added, often in something of an ad-hoc manner. Nevertheless, it became quite popular and useful. Especially with beginning programmers or people teaching beginning programmers.
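The "compile once, interpret anywhere" idea is easier to see with a toy. Here is a minimal sketch in C of a stack-machine interpreter. The opcodes (OP_PUSH, OP_ADD, OP_MUL, OP_HALT) and the function name run_pcode are made up for illustration; the real P Code instruction set is different and much richer.

```c
#include <stddef.h>

/* Hypothetical opcodes for illustration only; real P Code is different. */
enum { OP_PUSH, OP_ADD, OP_MUL, OP_HALT };

/* Interpret a tiny stack-machine program and return the top of the stack.
   The same 'code' array gives the same answer on any CPU for which this
   interpreter has been compiled. Returns 0 on a malformed program. */
int run_pcode(const int *code, size_t len)
{
    int stack[32];
    int sp = 0;                      /* number of items on the stack */
    size_t pc = 0;                   /* index of the next instruction */

    while (pc < len) {
        switch (code[pc++]) {
        case OP_PUSH:                /* the operand follows the opcode */
            if (pc >= len || sp >= 32) return 0;
            stack[sp++] = code[pc++];
            break;
        case OP_ADD:                 /* pop two values, push their sum */
            if (sp < 2) return 0;
            sp--;
            stack[sp - 1] += stack[sp];
            break;
        case OP_MUL:                 /* pop two values, push their product */
            if (sp < 2) return 0;
            sp--;
            stack[sp - 1] *= stack[sp];
            break;
        case OP_HALT:
        default:
            return sp > 0 ? stack[sp - 1] : 0;
        }
    }
    return sp > 0 ? stack[sp - 1] : 0;
}
```

Feeding it the program { OP_PUSH, 2, OP_PUSH, 3, OP_ADD, OP_PUSH, 4, OP_MUL, OP_HALT } evaluates (2 + 3) * 4. Only the interpreter has to be rewritten for a new processor; the compiled program stays the same.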

Step now to the University of California at San Diego. Dr. Kenneth Bowles was running the computer center there and trying to build a good system to teach programming. He came up with the idea of making a simple system that could run on many different small computers rather than one large computer that everyone shared. He found the Pascal compiler and its P Code, and started to work. He and his staff/students created an entire development system and operating system based on P Code and the Pascal compiler. It would run on many of the early personal computers and was quite popular. IBM even offered it as an alternative to PC-DOS (MS-DOS) and CP/M. I even found a paper on the web from an NSA journal describing how to get started with it. Imagine writing one program and compiling it, then being able to run that very same program with no changes on your Commodore PET, your Apple ][, your Altair running CP/M, your IBM PC, or any number of other computers. Much as Java promises today, but in 64K and 1 or 2 MHz!

Pascal itself has many advantages over C for new programmers. C (and its descendants) have many gotchas that trip up even experienced programmers on a regular basis. Pascal was designed to protect from many of those. The language Ada, devised for the US Department of Defense and well regarded when it comes to writing "correct" programs, was heavily based on Pascal. There are downsides to Pascal, but they are relatively minor and could be rather easily overcome. Turbo Pascal, popular in the early 80s on IBM PCs and CP/M machines (and one of the main killers of the UCSD P System), had many advances that took away most of the problems of Pascal.

So here is the idea. Write P Code interpreters for some of the small micros available, like Arduino. Modernize and improve the UCSD system, especially the Pascal compiler. Create a nice development system that allows Pascal to be written for most any small micro. Many of the problems of C, C++, and Arduino go away almost instantly. The performance won't be as good as native C produced machine language, but close enough for most purposes. Certainly much better than other interpreted language options. Niklaus Wirth went on to create several successors to Pascal, including the very powerful Modula 2 and Oberon. Modula 2 was implemented early on with M code, similar to P code. He even built a machine (Lilith) that ran M Code directly. If Pascal isn't right, perhaps Modula 2 would be.

In any case, I think this is a path worth investigating. I plan to do some work in the area. I would be very interested in hearing what you think about the idea.

Oh! And what about the title of this blog post? Well, the P Code part should be fairly obvious at this point. But the rest of it may not be. My alma mater, Austin Peay State University, has a football fight slogan of "Let's Go Peay!" I love that slogan, so I thought it would fit well with this idea.

Let's go P Code!

Saturday, August 8, 2015

Get Out of My Way!

In case you haven't noticed, I am building a PDP-8. Notice I didn't say an emulator or a simulator. I consider what I am doing a new implementation of the PDP-8 architecture. I won't explain that here, but perhaps another post later will explain why I say that. Today, I want to talk a little about how all our modern technology can make things harder, and what to do about it.

The PDP-8 is a very simple machine. That was very much intentional. Although the technology of the day was primitive compared to what we have now, much larger and more complex machines were being built. If you doubt that, do a bit of research on the CDC 6600, the first supercomputer, that arrived about the same time as the PDP-8. Much of what we consider bleeding edge tech was pioneered with that machine. With discrete transistors! But the PDP-8 was different.

The PDP-8 had a front panel with switches and lights that gave the user/programmer direct access to the internals of the CPU. If you really want to know what your computer is doing, nothing is better. You could look at and change all the internal registers. Even those the assembly language programmer normally has no direct control of. You could single step: not only by instruction as with a modern debugger, but also by cycles within an instruction! As useful as the front panel was, it wasn't very convenient for many uses. So a standard interface was a terminal connected to a serial line. The normal terminal of the day was a mechanical teletype. A teletype was much like a typewriter that sent keystrokes over the serial port and printed what was received over the serial port. They were often used as terminals (even on early microcomputers!) well into the 1980s.

The PDP-8 hardware to interface with a teletype was rather simple, as was most of its hardware. The I/O instructions of the machine are quite simple and the hardware was designed to match. The teletype hardware had a flag (register bit) to indicate if a character had been received, and another to indicate a character was finished sending. The IOT (input output transfer) instruction could test one of those flags and skip the next instruction if it was set. By following the IOT instruction with a jump instruction back to the IOT, you could have a loop that continuously tested the flag, waiting on a character, until a character was received. When the flag was set indicating the arrival of a character, the jump would be skipped over and the following code would read the incoming character. Simple, neat, and clean. A simple flip flop and a general test instruction allowed a two instruction loop to wait on the arrival of a character.
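The flag-and-skip loop described above can be sketched in C. This is only a model: the struct tty_rx, the tty_tick stand-in for the hardware, and the function wait_for_char are names I made up for illustration. On the real machine the loop is just two instructions, a skip-on-flag IOT followed by a JMP back to it.

```c
#include <stdbool.h>

/* Hypothetical model of the teletype receiver: one flag bit and a buffer. */
struct tty_rx {
    bool flag;        /* set by the "hardware" when a character arrives */
    unsigned buffer;  /* the received character */
};

/* Stand-in for the hardware: the character "arrives" after a number of
   polls. Purely illustrative, so the loop below has something to wait for. */
static void tty_tick(struct tty_rx *t, int *polls_left, unsigned ch)
{
    if (*polls_left <= 0 || --*polls_left == 0) {
        t->buffer = ch;
        t->flag = true;
    }
}

/* Wait for a character the PDP-8 way: test the flag, loop back if clear. */
unsigned wait_for_char(struct tty_rx *t, int polls_until_arrival, unsigned ch)
{
    for (;;) {
        tty_tick(t, &polls_until_arrival, ch);
        if (t->flag)          /* the IOT: skip the jump if the flag is set */
            break;
        /* the JMP back to the IOT: that is the entire wait loop */
    }
    t->flag = false;          /* reading the character clears the flag */
    return t->buffer;
}
```

Everything except the two lines inside the loop is scaffolding to fake the hardware. That is the point: the hardware gives you the flag for free.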

We want to build a new PDP-8 using a microcontroller. The chosen micro (NXP LPC1114 to start) will have a serial port that is fully compatible with the old teletype interface. Remarkably, that interface was old when the PDP-8 was created, and we still use it basically unchanged today. The interface hardware for serial ports like that today is called a UART (Universal Asynchronous Receiver Transmitter) or some variation of that. Lo and behold, it will have that same flag bit to indicate a received (or transmitted) character! Nice and simple. That won't present any problems for our emulation code. But we want to do our main development on a PC using all the power (and multiple large screens!) to make our lives easier. So, since the C language is "portable" and the standard language for embedded (microcontroller) work, we write in C on the PC for most of the work, then move to the micro for the final details. I prefer Linux, whose history goes back almost as far as the PDP-8. Alas, Linux gets in the way.

You see, Linux, like Unix before it, is intended to be a general purpose, multi-tasking, multi-user operating system. In one word, that can be summed up as "abstraction." In a general purpose operating system, you may want to attach any number of things to the computer and call it a terminal. But you only want to write code one way instead of writing it for every different type of terminal differently. So you create an abstract class of devices called "terminal" that defines a preset list of features that all the user programs will use to communicate with whatever is connected as a terminal. Then, the OS (operating system) has "drivers" for each type of device that makes communicating with them the same and convenient for most user programs. Notice the word "most."

Generally, most user programs aren't (or weren't in the early 1970s) interested in knowing if a key had been pressed or when, only getting the character that key represented. So Unix (and by virtue of being mostly a clone of Unix, Linux) doesn't by default make that information available. Instead, you try to read from the terminal and if nothing is available your program "blocks." That means it just waits for a character. That is great in a multi-tasking, multi-user OS: the OS can switch to another program and run that while it waits. But it doesn't work for simulating PDP-8 hardware: we have no way to simply test for a received character and continue. Our program will stop and control will go to Linux while it waits for a character to be typed.

Now I must digress and mention that Windows does indeed have by default a method to test for a keystroke: kbhit(). The kbhit function in Windows (console mode) is exactly what we need. It returns a zero if no key has been pressed, and non-zero if one has. Windows is a multi-tasking OS similar to Linux, so why does it have kbhit() and Linux doesn't? Not really by design, I assure you, but by default and compatibility. Windows grew from DOS, which was a single-tasking, single-user, personal computer OS. DOS was designed much in line with how the PDP-8 was designed. When Windows was added on top of DOS, it had to bring along the baggage. That chafed the Windows "big OS" designers a lot.

Now one of the things that made Unix (and Linux) so popular was that it would allow the programmer (and user) to do most anything that made sense. You may have to work at it a bit, but you can do it. The multitude of creators over the last 45 years have made it possible to get at most low-level details of the hardware in one way or another. I knew I wasn't the first to face this exact problem. The kbhit function is well used. So off to the Googles. And, sure enough, I found a handful of examples that were nearly the same. So I copied one.

    //////////////////////////////////////////////////////////////////////////////
    /// @file kbhit.c
    /// @brief from Thantos on StackOverflow
    /// http://cboard.cprogramming.com/c-programming/63166-kbhit-linux.html
    ///
    /// find out if a key has been pressed without blocking
    ///
    //////////////////////////////////////////////////////////////////////////////

    #include <stdio.h>
    #include <termios.h>
    #include <unistd.h>
    #include <sys/types.h>
    #include <sys/time.h>
    #include "kbhit.h"

    void console_nonblock(int dir)
    {
        static struct termios oldt, newt;
        static int old_saved = 0;

        if (dir == 1)
        {
            tcgetattr( STDIN_FILENO, &oldt);
            newt = oldt;
            newt.c_lflag &= ~( ICANON | ECHO);
            tcsetattr( STDIN_FILENO, TCSANOW, &newt);
            old_saved = 1;
        }
        else
        {
            if(old_saved == 1)
            {
                tcsetattr( STDIN_FILENO, TCSANOW, &oldt);
                old_saved = 0;
            }
        }
    }

    int kbhit(void)
    {
        struct timeval tv;
        fd_set rdfs;

        tv.tv_sec = 0;
        tv.tv_usec = 0;

        FD_ZERO(&rdfs);
        FD_SET(STDIN_FILENO, &rdfs);

        select(STDIN_FILENO+1, &rdfs, NULL, NULL, &tv);
        return FD_ISSET(STDIN_FILENO, &rdfs);
    }

    int test_kbhit(void)
    {
        int ch;

        console_nonblock(1); // select kbhit mode
        while( !kbhit())
        {
            putchar('.');
        }
        ch=getchar();
        printf("\nGot %c\n", ch);
        console_nonblock(0);
        return 0;
    }

That's an awful lot of code to expose a flip-flop that is already there. But such is the nature of abstraction. Having a powerful OS in charge of such low-level matters is the right way to go. But all too often it makes doing simple things difficult, if not impossible. I'm glad that the creators of Unix and Linux gave me the option to get the OS out of the way.

Saturday, July 25, 2015

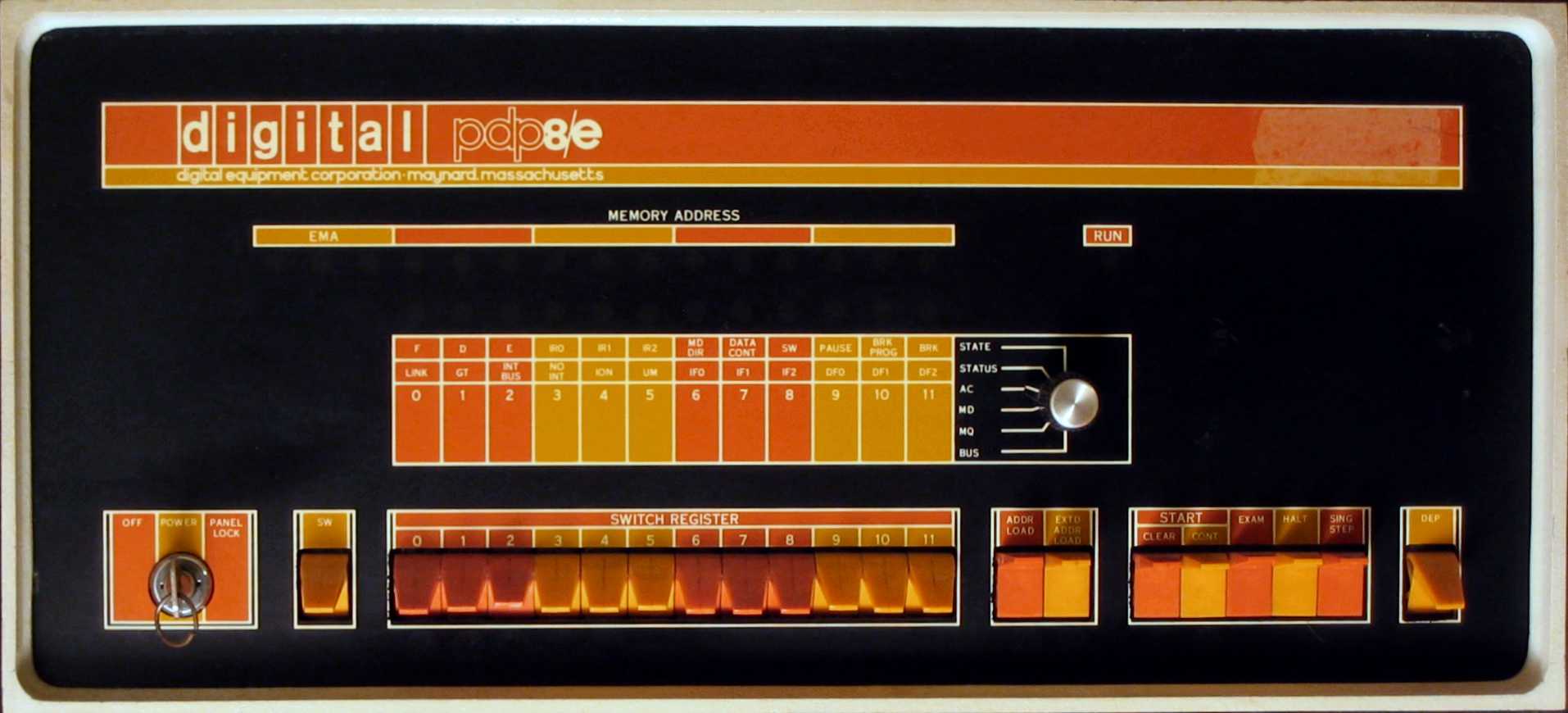

How to Build a 1965 Computer in 2015

In my last post I introduced my latest project, a replica of the PDP-8e. I have been quite busy doing research and writing code. The code is the easy part! The hardest part is making it look authentic. But, thankfully, I am not the only crazy person out there that wants to build a fifty year old computer. There seem to be quite a few of us. A few stand out. Oscar at Obsolescence Guaranteed has done much of what I'm working on. His approach is a bit different. First, he has re-created an 8i instead of an 8e. Second, he was smart and used an emulator already written and hardware already built to make much of his reproduction. He has done a great project and created a kit. I highly recommend you check it out.

Oscar has also done a lot of research that will help me. Fonts and colors for the front panel, for instance. Have you ever thought about how to go about reproducing a replica of a 50 year old piece of equipment and have all the colors and letters look just right?

There is also Spare Time Gizmos with the SBC 6120. That project/product uses the Harris 6120 PDP-8 microprocessor as the CPU for a sort-of genuine PDP-8. Alas, the 6120 and its predecessor the 6100 are rarer than gold-plated hens' teeth. But it's a really cool project with lots of good information and inspiration, much like PiDP-8 from Oscar.

Our approach will be a bit different. The front panel will be as authentic as I can reasonably make it, in looks and operation. So will the CPU, with a bit of a twist. I don't really want to build a few boards of TTL logic to create the CPU. The 6100 and 6120 are really hard to find and expensive when you do find one. Replacements and spares are just as rare and expensive. An FPGA would be good, but my Verilog and VHDL skills, as well as my fine pitch SMD soldering skills, aren't up to it yet. Not to mention I want to make this easily reproducible for others. So I have decided to take a 21st century 32 bit microcontroller with flash and RAM onboard and nice input output devices and create a new PDP-8 on a chip.

The PDP-8 instruction set is really simple. There are eight basic instructions. Two of those, Operate and IO Transfer can actually have several "microprogrammed" instructions to do various operations. But overall, it's quite simple. To emulate the instructions, at least two approaches can be used. First, you can write code that simply ends up with the same result as each instruction. That's fine for most uses and tends to be more efficient. Let the new processor work the way it is intended and get the results you need. As long as the final state at the end of each instruction is exactly what it would be with the real computer, everything is fine. No matter how you got there. The other way, though, is more suitable to our purpose.

What we are going to do is essentially emulate the same hardware as used in the PDP-8 in our program. It will, of course, end up with the same results. But how we get there matters. The PDP-8 CPU has three "major states" with four clock cycles in each major state. Our code will follow that, doing the same processing in each state and cycle as the real hardware does. It won't be as efficient, but since we are running a very fast 32 bit modern processor to emulate a 12 bit system from 50 years ago, it should be plenty fast. This raises the question, "why?"

The answer is simple: we want to build an entire PDP-8, not just a program that emulates one. The real PDP-8 can be stepped through individual cycles and the front panel shows what is inside the CPU at each step. We can only do that if we follow the same steps. Plus, if we decide to add an external bus to add peripherals (like the Omnibus of the real PDP-8e) we will need those individual cycles to make the real hardware respond properly.
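To give a feel for what "following the same steps" means, here is a minimal sketch in C of the sequencing only: three major states, four time states in each, advanced one time state per call. The names (struct cpu, next_state, step_time_state) are hypothetical, the next-state rule is a simplification I am assuming for illustration, and the actual work done in each time state is left out. It is not the project's real code.

```c
/* The three major states; each one lasts four time states. */
enum major_state { FETCH, DEFER, EXECUTE };

struct cpu {
    enum major_state state;
    int ts;          /* time state within the major state, 1..4 */
    unsigned ir;     /* 12-bit instruction register (assumed already loaded) */
};

/* Simplified, assumed rule for which major state follows the current one. */
static enum major_state next_state(const struct cpu *c)
{
    unsigned opcode = (c->ir >> 9) & 7;   /* top three bits */
    int indirect = (c->ir >> 8) & 1;      /* indirect addressing bit */

    switch (c->state) {
    case FETCH:
        if (opcode >= 6) return FETCH;          /* IOT and OPR need no operand */
        if (indirect) return DEFER;             /* go fetch the real address */
        return opcode == 5 ? FETCH : EXECUTE;   /* a direct JMP is already done */
    case DEFER:
        return opcode == 5 ? FETCH : EXECUTE;
    default:
        return FETCH;                           /* after EXECUTE, next instruction */
    }
}

/* Advance the CPU by exactly one time state. A front panel "single step"
   switch could call this once and then display the registers. */
void step_time_state(struct cpu *c)
{
    /* the register transfers for (c->state, c->ts) would happen here */
    if (c->ts < 4) {
        c->ts++;
    } else {
        c->ts = 1;
        c->state = next_state(c);
    }
}
```

Because the emulator can stop between any two calls, the front panel can show the machine at every cycle, which a "just compute the result" emulator cannot do.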

The first step is to write the emulator program and put it into a microcontroller. I have started with an NXP LPC1114. I have them and I'm familiar with them and they are plenty powerful enough to make this work. They come in a 28 pin DIP package for easy prototyping. The biggest downside is the DIP version only has 4K Bytes of RAM, so we won't be able to emulate even a full 4K word PDP-8. I think I can fit 2K words in it, though. And that should be fun to play with. Eventually I will use a somewhat larger chip that can hold the entire 4K words or maybe even the full blown 32K words. The plan is to create a chip that has a fixed program inside in the flash that emulates a real PDP-8 as accurately as possible. I will add the front panel hardware and any other IO devices to that. Others can take the same chip, much as they might with a (hard to get) 6100 or 6120 chip, and use it as the CPU for their own PDP-8!
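One way to squeeze 2K words into 4K bytes and still leave room for everything else is to pack two 12 bit words into three bytes, so 2048 words take 3072 bytes instead of 4096. The sketch below shows that layout in C. It is only one possible approach, not necessarily the one this project will use, and the names core, mem_read, and mem_write are made up for illustration.

```c
#include <stdint.h>

#define WORDS 2048                       /* assumed memory size, in 12-bit words */

/* Two 12-bit words share three bytes: 2048 words fit in 3072 bytes. */
static uint8_t core[WORDS / 2 * 3];

/* Read the 12-bit word at 'addr' (wrapped to the memory size). */
unsigned mem_read(unsigned addr)
{
    const uint8_t *p;

    addr %= WORDS;
    p = &core[(addr / 2) * 3];
    if (addr & 1)                        /* odd word: low nibble of p[1], then p[2] */
        return ((unsigned)(p[1] & 0x0F) << 8) | p[2];
    return ((unsigned)p[0] << 4) | (p[1] >> 4);   /* even word: p[0], high nibble of p[1] */
}

/* Write the low 12 bits of 'value' to 'addr' without disturbing its neighbor. */
void mem_write(unsigned addr, unsigned value)
{
    uint8_t *p;

    addr %= WORDS;
    value &= 07777;
    p = &core[(addr / 2) * 3];
    if (addr & 1) {
        p[1] = (uint8_t)((p[1] & 0xF0) | (value >> 8));
        p[2] = (uint8_t)(value & 0xFF);
    } else {
        p[0] = (uint8_t)(value >> 4);
        p[1] = (uint8_t)(((value & 0x0F) << 4) | (p[1] & 0x0F));
    }
}
```

The cost is a few shifts and masks on every memory access, which a fast 32 bit micro should hardly notice.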

Currently, the CPU emulation is about 90% coded. Once the basic coding is complete, testing for real will begin. I've run a couple of simple programs on it so far and the results are encouraging. There is still a lot of work to do. Mostly IO stuff. But I hope to soon be able to post the initial version of a 21st century PDP-8 CPU chip. Get your soldering irons warm and take that paper tape reader out of storage!

picture from http://vandermark.ch/pdp8/index.php?n=Hardware.Processor

Thursday, July 16, 2015

A Mini Project -- BDK-8e

Today we take personal computers for granted. They are everywhere. But of course that wasn't always true. In the 1950s a "small" computer would fill a room and typically take as much power as a household (or more!) A few lucky people got to work on small machines, like the IBM 650 where they had free and total access to the machine for a time. But mostly the machines were too big and expensive to allow one person total access. They were typically locked away and the only access was by passing a deck of program cards to an operator with your new program to run and getting a printout back sometime later. If there was an error in your program you had to start over.

Then the transistor got common and (relatively) cheap, and like skirts at the same time, computers started getting smaller. Digital Equipment Corporation (DEC) was founded in 1957 with the intention of making computers. The founders had just come from MIT and Lincoln Labs where they had played important roles in developing some early small computers. But the venture capitalists wouldn't let them build computers right away, insisting they build logic modules like they had used in the earlier computers. It was perceived that the market was too small, but the logic modules were in demand.

After two years they finally built their first computer, the PDP-1, using those same logic modules. Indeed, the logic modules sold well and were used in several DEC computers. The name PDP comes from Programmed Data Processor; again, the venture capitalists thought the term computer was too menacing.

The PDP-1 was well received. It was much more affordable than other computers at only $120,000. Most others cost $1,000,000 or more. But more importantly it was "friendly." A user could sit down at it and have it to himself (women were rare.) It had some interesting devices attached, like a video monitor, that could be used for nifty stuff. Perhaps the first video game, Spacewar, was created on the PDP-1.

With this first success, DEC moved forward with a series of new machines. Inspired by their own success and some other interesting machines, especially the CDC-160 and the Linc, they decided to build a really small and inexpensive machine: the PDP-8. The PDP-8 was a 12 bit computer, where most of their previous machines were 18 bits (the PDP-5 was also 12 bits, and the PDP-6 was 36). It would be another four or five years before making the word size a power of 2 (8, 16, 32 bits) would become common. Computer hardware was expensive and a minimum number of bits would cut the cost dramatically. It also created limitations. But the PDP-8 was the right machine at the right time, probably from the right company. It sold for a mere $18,000. Up to then a production run of 100 machines was considered a success. The PDP-8 would go on to sell around 300,000 over the next fifteen to twenty five years.

The original PDP-8 led to successors: the PDP-8L (lower cost), the PDP-8S (serial), the PDP-8I (integrated circuits), and then the PDP-8e. There were more models after that, but it seems the PDP-8e was the defining machine. It offered considerably higher performance than its predecessors and was much smaller. It was offered in a tabletop cabinet that would fit on a researcher's lab table. Many were used for just such purposes. It was more reliable as well, being made from newer Medium Scale Integration (MSI) integrated circuits that fit many more functions on a chip. Reliability is directly linked to the number of parts and pins used, and the 8e reduced both considerably. There were very few new features added to later models. The 8e was in some ways the epitome of the 8 series. To some degree it defined the minicomputer class.

As I mentioned, the 8 was a 12-bit computer. That sounds odd today, but wasn't then. Twelve-bit, 18-bit, and 36-bit computers were rather common, and there was even a 9-bit machine that was one of the inspirations for the PDP-8. Twelve bits allowed it to directly address 4 kilowords of RAM (4096 12-bit words, or 6 kilobytes). That was the normal amount sold with the PDP-8. It was magnetic core memory and horrendously expensive. With a memory controller it could be expanded to 32 kilowords. The processor recognized eight basic instructions: AND, TAD (two's complement add), DCA (deposit and clear accumulator), ISZ (increment and skip on zero), JMP (jump to new address), JMS (jump to a subroutine and save the return address), IOT (input output transfer), and OPR (operate). The last one is really interesting and is actually a whole family of instructions that didn't access memory directly. OPR was "microprogrammed," meaning that several bits in the instruction had specific meanings and caused different operations to happen. They could be combined to effectively execute several operations with one instruction.
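To make the instruction layout a bit more concrete, here is a small Python sketch. It is only an illustration, not code from any real emulator: the function and table names are my own invention. It assumes the standard PDP-8 encoding, where the top three bits of the 12-bit word select one of the eight instructions (DEC's mnemonic for the subroutine jump is JMS), and it covers only five of the "group 1" OPR bits, leaving out the rotates and the other OPR groups.

```python
# Illustrative sketch only: names here are hypothetical, not from DEC or
# from any existing emulator.

# The top three bits of a 12-bit word select one of eight instructions.
MNEMONICS = ("AND", "TAD", "ISZ", "DCA", "JMS", "JMP", "IOT", "OPR")

# A subset of the group 1 OPR bits (rotate bits are left out for brevity).
GROUP1_BITS = (
    (0o200, "CLA"),  # clear accumulator
    (0o100, "CLL"),  # clear link
    (0o040, "CMA"),  # complement accumulator
    (0o020, "CML"),  # complement link
    (0o001, "IAC"),  # increment accumulator
)


def opcode(word):
    """Return the mnemonic for the basic instruction encoded in a word."""
    return MNEMONICS[(word & 0o7777) >> 9]


def group1_ops(word):
    """List the group 1 micro-operations combined in one OPR word."""
    word &= 0o7777
    if (word >> 9) != 0o7 or word & 0o400:
        raise ValueError("not a group 1 OPR instruction")
    return [name for mask, name in GROUP1_BITS if word & mask]


# Twelve address bits reach 4096 words; at 12 bits each that is 6144 bytes.
WORDS = 2 ** 12
BYTES = WORDS * 12 // 8

print(opcode(0o1234))        # TAD
print(group1_ops(0o7300))    # ['CLA', 'CLL']
print(group1_ops(0o7041))    # ['CMA', 'IAC']
print(WORDS, BYTES)          # 4096 6144
```

The word 7300 (octal) clears both the accumulator and the link in one instruction, and 7041 complements and then increments the accumulator, which negates it. That is the "several operations with one instruction" trick in action.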

So the PDP-8 was a very interesting, if minimal, machine. And it was very popular and very important in the history of computers. Many individuals collect them and keep them. Alas, I do not have one. Some people have re-created them either from original schematics or by using FPGAs or the 6100 or 6120 single-chip PDP-8 microprocessors. Even those chips are hard to find now. And that leads me to my new project.

I am creating a new PDP-8 implementation. The heart of it will be a small microcontroller (ARM) that is programmed to emulate the PDP-8 as accurately as I can make it. That means with the same input and output system and devices, as well as instruction set. I intend it to run at the same performance level normally. I hope to create an Omnibus (the PDP-8e bus) interface that will allow connecting actual PDP-8 peripherals. I'm not sure exactly what all features it will include, but I plan to make it as complete as reasonably possible. I will make all the code and schematics available in case anyone else wants to build one.
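To give a feel for what "emulating the PDP-8" means, here is a rough Python sketch of a fetch and execute step. It is not the project's code (that will live on the microcontroller), and every name in it is made up for illustration. It assumes the standard PDP-8 encoding, handles only the six memory reference instructions, and skips IOT, OPR, the auto-index locations, and extended memory entirely.

```python
# Hypothetical sketch of a PDP-8 step function. Not the real project code;
# IOT, OPR, auto-indexing, and memory extension are deliberately left out.

class PDP8:
    def __init__(self):
        self.mem = [0] * 4096   # 4K words, each assumed to hold 12 bits
        self.pc = 0             # program counter
        self.ac = 0             # 12-bit accumulator
        self.link = 0           # 1-bit link (carry) register

    def step(self):
        """Fetch and execute one memory reference instruction."""
        here = self.pc
        word = self.mem[here]
        self.pc = (self.pc + 1) & 0o7777
        op = word >> 9
        if op >= 6:
            raise NotImplementedError("IOT and OPR are not in this sketch")

        # Effective address: 7-bit offset on page zero or the current page.
        ea = word & 0o177
        if word & 0o200:            # page bit: use the instruction's page
            ea |= here & 0o7600
        if word & 0o400:            # indirect bit: fetch the real address
            ea = self.mem[ea]

        if op == 0:                 # AND
            self.ac &= self.mem[ea]
        elif op == 1:               # TAD: a carry out complements the link
            total = self.ac + self.mem[ea]
            if total > 0o7777:
                self.link ^= 1
            self.ac = total & 0o7777
        elif op == 2:               # ISZ: increment, skip next if zero
            self.mem[ea] = (self.mem[ea] + 1) & 0o7777
            if self.mem[ea] == 0:
                self.pc = (self.pc + 1) & 0o7777
        elif op == 3:               # DCA: store accumulator, then clear it
            self.mem[ea] = self.ac
            self.ac = 0
        elif op == 4:               # JMS: save return address, jump past it
            self.mem[ea] = self.pc
            self.pc = (ea + 1) & 0o7777
        else:                       # JMP
            self.pc = ea


# Tiny program at octal 200: add two numbers and store the sum.
cpu = PDP8()
cpu.mem[0o200] = 0o1205   # TAD 205
cpu.mem[0o201] = 0o1206   # TAD 206
cpu.mem[0o202] = 0o3207   # DCA 207
cpu.mem[0o205] = 2
cpu.mem[0o206] = 3
cpu.pc = 0o200
for _ in range(3):
    cpu.step()
print(cpu.mem[0o207])     # 5
```

A real implementation also has to get the timing and the I/O devices right, which is where most of the work is likely to be.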

So, go and Google the PDP-8 to see what a wide array of stuff is on the web about this machine. Then come back and follow along my journey creating this new relic. It should be fun...

My PDP-8 project page.
