The automobile. How has it affected your life? Can you imagine what our lives would be like without it? The subject of books and movies and songs. The sketches and dreams of teenage boys. Do you remember getting behind the wheel the first time? Remember what it felt like the first time you drove alone? The freedom and excitement of finally having your driver's license? For about a hundred years the automobile has been a fixture of daily life in most of the world. But the times they are a-changin'.

"1919 Ford Model T Highboy Coupe". Licensed under CC BY-SA 3.0 via Wikimedia Commons - http://commons.wikimedia.org/wiki/File:1919_Ford_Model_T_Highboy_Coupe.jpg#/media/File:1919_Ford_Model_T_Highboy_Coupe.jpg

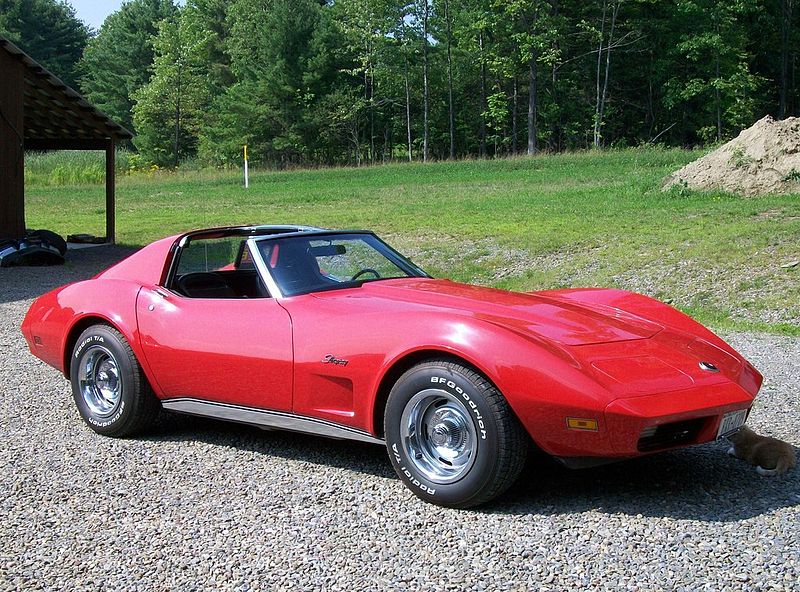

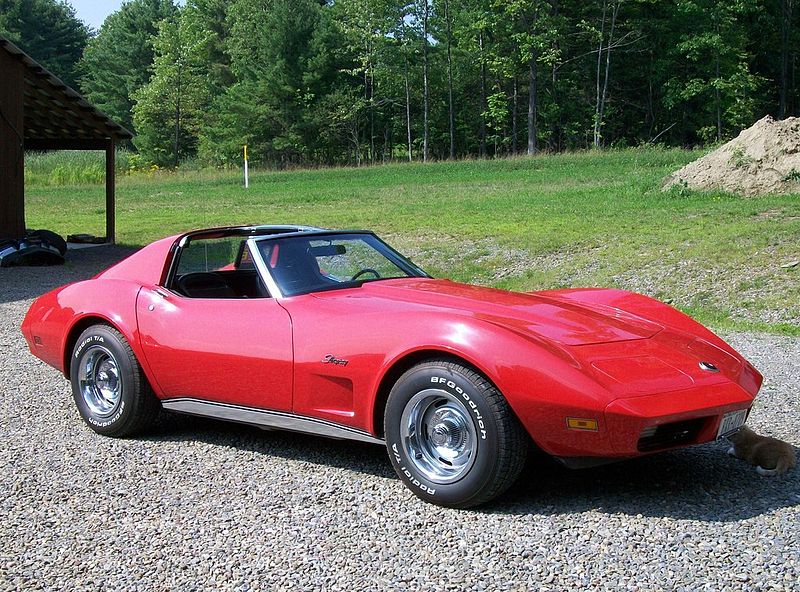

If you compare the 1919 Model T in the picture above to almost any common car of the late 1970s, there aren't many major differences. Both would most likely have an internal combustion engine burning gasoline, connected to the wheels through a gearbox and mechanical linkage. Of course the newer model would have more refined engineering and some creature comforts like air conditioning, power steering, and power brakes, but the underlying technology is nearly identical. When you push the accelerator pedal on either one, a mechanical linkage opens a valve to allow more air and fuel into the engine. The brake pedal similarly has a mechanical or hydraulic linkage to the brakes on the wheels. Pretty simple and straightforward. For about sixty years the automobile didn't change much from a technological standpoint.

Much more refined, but technologically nearly the same.

"74 Corvette Stingray-red" by Barnstarbob at English Wikipedia. Licensed under CC BY-SA 3.0 via Wikimedia Commons - http://commons.wikimedia.org/wiki/File:74_Corvette_Stingray-red.jpg#/media/File:74_Corvette_Stingray-red.jpg

But what is in your garage now? Much of the technology is probably the same. It probably still has an internal combustion engine. But a lot is new and the new is taking over quickly. If you are still making payments on your car, it probably has more computers in it than existed in the world in 1953 when the Corvette was introduced. Those computers are leading a revolution in automobile engineering, as we shall see.

"An ECM from a 1996 Chevrolet Beretta- 2013-10-24 23-13" by User:Mgiardina09 - Own work. Licensed under CC BY-SA 3.0 via Wikimedia Commons - http://commons.wikimedia.org/wiki/File:An_ECM_from_a_1996_Chevrolet_Beretta-_2013-10-24_23-13.jpg#/media/File:An_ECM_from_a_1996_Chevrolet_Beretta-_2013-10-24_23-13.jpg

Computers in cars started off in the engine control unit (ECU). Engine parameters like timing and fuel-air ratio need to be adjusted for the best efficiency, performance, and emissions as the operating environment changes. The ECU replaces a lot of complex mechanical contraptions that were still less than optimal. Now it is quite likely that your accelerator pedal is not connected directly to the fuel supply. Rather, it is probably an input to the ECU. The ECU treats the position of the accelerator, and even the rate at which that position changes, as a request. It weighs your request against all the other parameters it monitors to determine how much fuel to send to the engine and when. It probably does a lot more, too. And as time goes by it takes over more and more of your driving.
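To make the "pedal as a request" idea concrete, here is a minimal sketch in Python. It is not how any real ECU is programmed: the function name, the `EngineState` fields, and every threshold and constant are made up for illustration. It only shows the shape of the logic, where the pedal position and its rate of change form a request that the computer then adjusts based on what else it knows.

```python
from dataclasses import dataclass


@dataclass
class EngineState:
    """A tiny, hypothetical subset of what an ECU might monitor."""
    rpm: float
    coolant_temp_c: float


def throttle_command(pedal: float, pedal_rate: float, state: EngineState) -> float:
    """Return a throttle opening between 0 and 1.

    pedal is the pedal position (0 = released, 1 = floored) and
    pedal_rate is how fast it is moving. All constants are illustrative.
    """
    # Start from the driver's request: the pedal position itself.
    request = pedal
    # A quick stab at the pedal is read as urgency and adds a small boost.
    request += 0.1 * max(pedal_rate, 0.0)
    # Made-up protection rules: go easy on a cold engine...
    if state.coolant_temp_c < 40.0:
        request = min(request, 0.6)
    # ...and refuse the request entirely near the rev limit.
    if state.rpm > 6500.0:
        request = 0.0
    # Keep the final command inside the valid range.
    return max(0.0, min(request, 1.0))
```

With a warm engine at moderate revs the command simply follows the pedal. With a cold engine or at very high revs, the same pedal input gets a different answer, which is the whole point: the driver asks, the computer decides.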

What about the brakes? They probably still have the same hydraulic system they had in 1979. But it has been augmented with a computer too. Do you have anti-lock brakes? In an anti-lock brake system a computer monitors the brake pedal and wheel rotation when you are braking. If it detects that a wheel is slowing faster than it should, indicating an impending skid, it releases pressure on the brake of that wheel. Doing that requires the computer to have some way of controlling the brake pressure at each wheel. Modern systems have evolved to do more than prevent skids. They now enhance stability and traction as well. So again, your pressure on the brake pedal becomes something of a request to the braking system. The computer now has control of the brake at each wheel, and the messy, heavy, and space-consuming hydraulic lines become almost redundant. In the future we will likely see more electronic brakes, where the wheel cylinder is actuated electrically by the computer, doing away with much or all of the hydraulic system.
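The anti-lock decision described above can be sketched in a few lines of Python. This is a simplified illustration, not a real controller: the function name, the slip calculation, and the 20% slip limit are my assumptions, and a real system pulses pressure many times per second rather than switching it fully off.

```python
def abs_step(pedal_pressure, vehicle_speed, wheel_speeds, slip_limit=0.2):
    """Return the brake pressure to apply at each wheel for one control step.

    A wheel turning much slower than the car is moving is about to lock up,
    so its brake is released. The slip_limit value is illustrative only.
    """
    pressures = []
    for wheel_speed in wheel_speeds:
        if vehicle_speed <= 0.0:
            slip = 0.0  # car is stopped, nothing can skid
        else:
            # Fraction by which the wheel lags behind the vehicle.
            slip = (vehicle_speed - wheel_speed) / vehicle_speed
        if slip > slip_limit:
            pressures.append(0.0)             # impending skid: release this wheel
        else:
            pressures.append(pedal_pressure)  # otherwise honor the driver's request
    return pressures
```

For example, `abs_step(1.0, 20.0, [19.0, 19.0, 10.0, 19.0])` releases only the third wheel, the one turning at half the vehicle's speed, and passes the pedal pressure through to the other three.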

What about safety systems? Many new cars have rear-looking cameras in addition to the standard mirrors so that the driver can see what is behind the car, especially when backing up. In addition, obstacle sensors are becoming more common to warn of an impending collision with something the driver may not be able to see. Since all these systems are computerized, and the various computers in the car are linked by a network, it is possible to control the brakes and engine to stop the vehicle without the driver having to take action. Systems have also been developed to detect when the vehicle is about to leave the lane or road it is traveling in and warn the driver to take action.

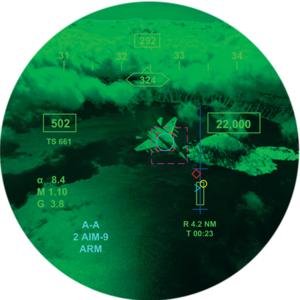

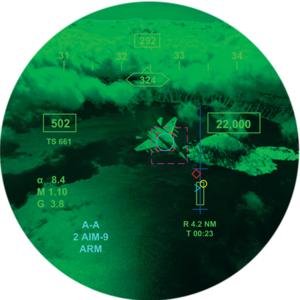

And then there are communication and navigation aids. Many new cars have some sort of cellular or Internet connection. These are sometimes used to call for help automatically in the event of an accident, or to manually request assistance for various troubles. Navigation aids allow the driver to enter a destination and get turn-by-turn directions to get there, taking current traffic and obstacles into account. One possible future development that I think has a lot of merit is taken from modern aircraft. In many modern aircraft, especially military, there is either a Head Up Display (HUD) or Helmet Mounted Display (HMD), or both. These systems place a transparent overlay in the operator's field of view that shows real-time data on top of what the operator actually sees. That data can be the typical data displayed on the dashboard. My wife's 1995 Pontiac Grand Prix had a simple Head Up Display that projected most of the dash information onto the windshield, allowing the driver to get that information without having to look away from the road. But what if, instead of your navigation system speaking to you to give directions, it overlaid a line on the display showing the exact route you need to take as you drive? Current military systems are large and expensive, but automotive requirements are more lax and easier to meet at low cost. No helmet is required: Google Glass or an Oculus Rift combined with a navigation system would probably provide a working solution. Mass production would bring the cost way down, making it available to almost all car owners. I hope we don't need weapons integration, which helps keep the cost down as well.

A night vision scene through a modern Helmet Mounted Display

http://jhmcsii.com/features-and-benefits/

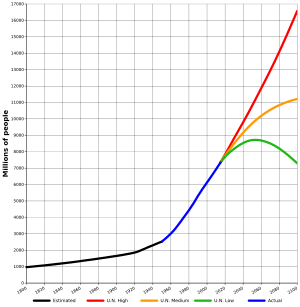

The common thread through all of this has been that technology is improving safety, performance, and comfort by taking more responsibility away from the driver. But so far, the system has been limited to assisting a single driver of a single car. What happens when the cars start communicating among themselves and with the roadways? We already have the Google self-driving car, which works mostly alone, driving on the road with human drivers. According to Wikipedia, cars are the largest cause of injury-related deaths in the world. Most accidents are caused by poor human judgement. With a computer in charge, the human factor is removed. If all the cars are networked together and with the roadway and traffic signals, there can no longer be poor human judgement involved. Although it is unlikely that accidents would be completely eliminated, they would become much less common. The reduction in loss of human life or injury and of property damage would be dramatic.

Driverless Car

"Jurvetson Google driverless car trimmed" by Flckr user jurvetson (Steve Jurvetson). Trimmed and retouched with PS9 by Mariordo - http://commons.wikimedia.org/wiki/File:Jurvetson_Google_driverless_car.jpg. Licensed under CC BY-SA 2.0 via Wikimedia Commons - http://commons.wikimedia.org/wiki/File:Jurvetson_Google_driverless_car_trimmed.jpg#/media/File:Jurvetson_Google_driverless_car_trimmed.jpg

The technology of actually propelling the vehicle is changing as well. Fully electric cars aren't very common yet, but Tesla Motors has proven their viability. There are several models available. Replacing a large, heavy, complex internal combustion engine and drivetrain with two or four simple electric motors on the wheels has great benefits. It also reduces pollution and dependence on the limited oil supplies. More common now, though, is the hybrid vehicle. It combines electric assistance with an internal combustion engine to reduce fuel use. Look around. You will probably see several hybrids close by, and maybe even an electric car. Electric is the wave of the future.

The changes in automotive technology over the last thirty-five years have been dramatic and far-reaching. Computer and electronic technology has led that change, as in many other fields. In the not-too-distant future, what we know as the automobile will not look much like what we grew up with. Other than having four wheels, it is likely to be completely different. What will it look like? I don't think anyone can definitively answer that, but I have some predictions.

Let's look twenty years into the future. Limited oil supplies and pollution concerns will make the internal combustion engine a small niche market. It will become nearly impossible to buy a standard passenger car with anything other than electric power. That car will drive itself, perhaps with some input from the "driver." It won't be driving alone, though. It will be communicating and negotiating its actions with all the nearby cars and with the roadways themselves. A car won't proceed through an intersection until the intersection gives it permission. It will know where all the other cars around it are, and where it is safe to go without hitting one. Since it will be much safer and much less likely to be involved in an accident, many of the safety features we know now will go away or decline in prominence. That will allow the car to be lighter and roomier without becoming any larger. Communication will overtake control of the car as the dominant feature set. Costs will likely come down since most of the technology will be electronic, and electronics have historically dropped rapidly in cost. Less material will be used, and it will be of lighter and cheaper construction. The soccer mom in the minivan will be a lot less common: the kids can get to the game on their own since no driver will be required.
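Nobody knows yet how cars and intersections would actually negotiate, but the idea of asking permission is easy to sketch. The Python below is purely my own toy illustration: the `Intersection` class and its methods are hypothetical, and it lets only one car through at a time, which a real system would surely improve on.

```python
class Intersection:
    """Toy intersection that admits one car at a time (illustrative only)."""

    def __init__(self):
        self.occupant = None  # ID of the car currently allowed through, if any

    def request_entry(self, car_id):
        """Return True if the car may proceed, False if it must wait."""
        if self.occupant is None:
            self.occupant = car_id
            return True
        # A car that already holds permission keeps it; everyone else waits.
        return self.occupant == car_id

    def leave(self, car_id):
        """Called by a car once it has cleared the intersection."""
        if self.occupant == car_id:
            self.occupant = None
```

A car would call `request_entry` as it approaches and keep slowing down until the answer is `True`, then call `leave` on the far side so the next car can go.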

The automobile has changed the face of humanity drastically over the last hundred years. But the changes coming now will be in some ways even more dramatic. I've made my predictions, but only time will tell how it actually turns out. I'm interested to hear what you have to say about it all. Leave a comment below.

Where are we headed? Wherever we end up.